Security and Privacy in AI/ML Systems

A practical, in-depth guide to understanding and safeguarding artificial intelligence and machine learning systems in the modern world.

- Security Risks

- Privacy Solutions

- ML Lifecycle

- Real-world Examples

1. Introduction

Artificial Intelligence (AI) and Machine Learning (ML) systems are revolutionizing industries, from healthcare to finance to transport. But as organizations increasingly rely on these technologies, issues surrounding the security and privacy of data and models have become critical.

This guide explores the risks, real-world attacks, mitigation strategies, and privacy-preserving approaches that every AI/ML practitioner, data scientist, or product owner should know. It explains complex ideas in plain language with practical examples, and uses the widely reported Samsung–ChatGPT incident as a running case study.

2. Security & Privacy Challenges in AI/ML

AI/ML systems introduce new security and privacy risks compared to traditional software. Why? Because they rely on vast datasets—often using sensitive personal or corporate data—and use complex mathematical models that can be tricked, probed, or even reverse-engineered.

Key Challenges:

- Collecting, storing, and using sensitive data—sometimes without explicit consent

- Growing sophistication of adversarial attacks against ML models

- Difficulty in ensuring data anonymization and preventing data leakage

- Complex regulatory environment around privacy and AI ethics

- Model interpretability: Explaining how an AI/ML decision was reached is often hard

Example Scenarios:

- A hospital trains an AI to predict heart attacks from patient records. If privacy isn’t enforced, a data breach could expose personal health information.

- A bank uses ML for fraud detection. If adversaries understand the model, they could design attacks to evade detection.

Fast Fact:

According to Stanford University's HAI Privacy Study, privacy is consistently one of the public's top concerns in AI deployment, especially with rapid advances like large language models (LLMs).

3. Common Vulnerabilities and Threats in AI/ML Systems

AI/ML systems face unique attack vectors and failure modes. Here are the most frequent and dangerous, with illustrations where possible.

Input Manipulation

Attackers craft input data to trick an AI model into malfunctioning. For example, adversarial images can cause an AI vision system to incorrectly identify objects by changing only a handful of pixels.

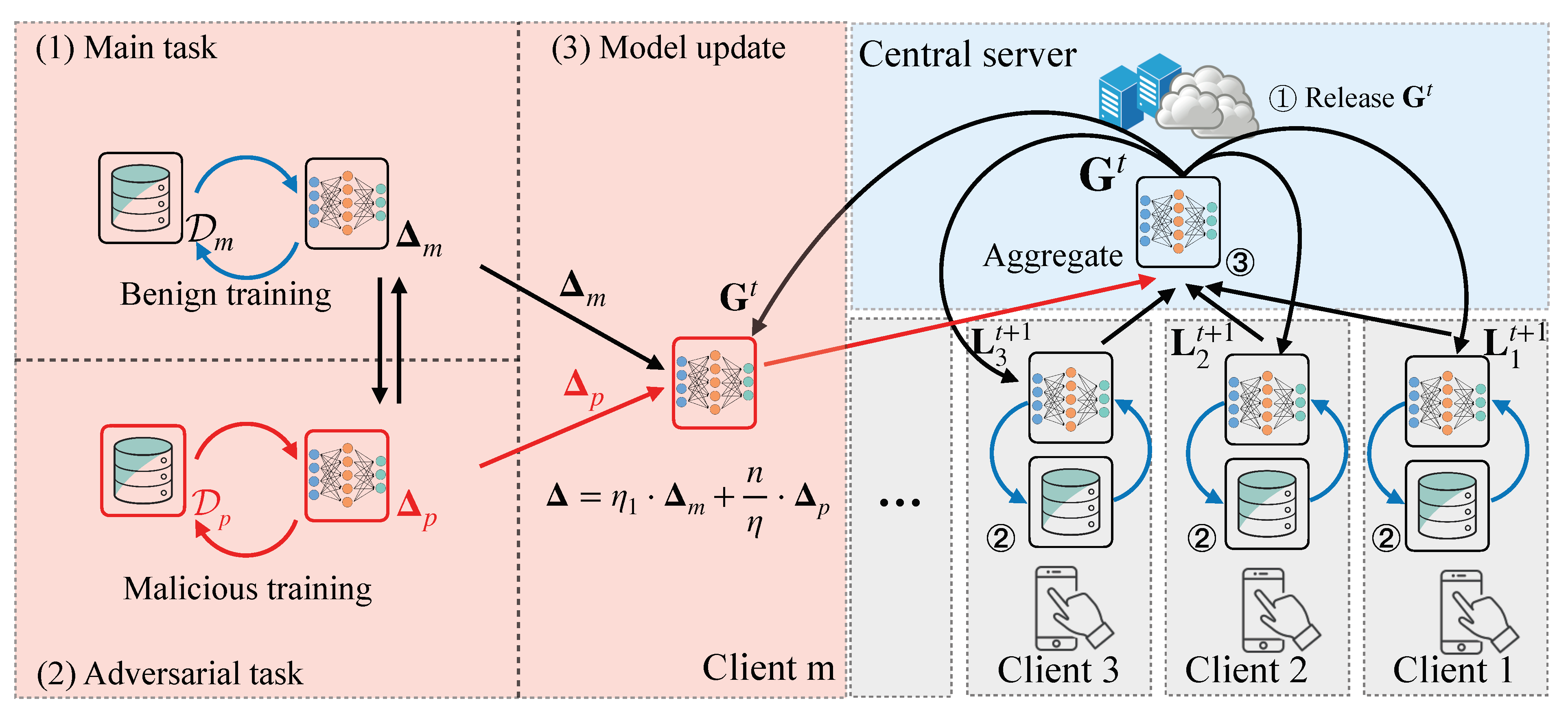
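To make the idea concrete, here is a minimal sketch of one well-known input-manipulation technique, the Fast Gradient Sign Method (FGSM), applied to a tiny logistic-regression model. FGSM is not named in this guide; it is used here only as an illustration. The weights, input, and `epsilon` are made-up toy values, not taken from any real system.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, epsilon):
    """Shift every feature by +/- epsilon in the direction that increases the loss."""
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w              # gradient of binary cross-entropy w.r.t. the input
    return x + epsilon * np.sign(grad_x)

# Hypothetical toy model and input, chosen for illustration only.
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.6, 0.1, 0.2])        # clean input whose true label is 1
y = 1.0

x_adv = fgsm_perturb(x, y, w, b, epsilon=0.2)
clean_score = sigmoid(w @ x + b)      # model's confidence in class 1 before the attack
adv_score = sigmoid(w @ x_adv + b)    # confidence after the attack
print(clean_score, adv_score)
```

In this toy setup the score for the correct class falls from about 0.62 to about 0.45, so the predicted label flips even though no feature moved by more than 0.2. Real attacks on image models work on the same principle, just in far more dimensions.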

Data Poisoning

Malicious actors deliberately inject "bad" or biased examples into the AI/ML training dataset, corrupting the model’s behavior. This is especially risky when ML models train on crowd-sourced or externally gathered data.
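The effect can be sketched with a deliberately simple classifier. The example below assumes a nearest-centroid model and hand-picked toy points (both are illustrative choices, not something from a real incident) and shows how a few mislabeled rows drag a class centroid far enough to change a prediction.

```python
import numpy as np

def fit_centroids(X, y):
    """Nearest-centroid 'training': one mean vector per class."""
    return {int(label): X[y == label].mean(axis=0) for label in np.unique(y)}

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: np.linalg.norm(x - centroids[label]))

# Clean toy data: class 0 near (0, 0), class 1 near (4, 4).
X_clean = np.array([[0.0, 0.0], [0.5, 0.5], [4.0, 4.0], [3.5, 3.5]])
y_clean = np.array([0, 0, 1, 1])

# Attacker-supplied rows: points deep in class-1 territory, labeled as class 0.
X_poison = np.full((4, 2), 5.0)
y_poison = np.zeros(4, dtype=int)

probe = np.array([3.0, 3.0])
clean_pred = predict(fit_centroids(X_clean, y_clean), probe)
poisoned_pred = predict(
    fit_centroids(np.vstack([X_clean, X_poison]),
                  np.concatenate([y_clean, y_poison])),
    probe,
)
print(clean_pred, poisoned_pred)
```

The probe point is classified as class 1 on clean data and as class 0 once the poisoned rows are included. Production models are harder to move, but the mechanism is the same, which is why data provenance and outlier checks matter.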

Model Inversion & Privacy Leakage

Attackers can use repeated probing queries to reverse-engineer private training data from a model. For example, if a fraud detection model is exposed via an API, an attacker could query it until they reconstruct sensitive patterns present in the data.

Model Extraction & Intellectual Property Theft

Given enough API access, adversaries can copy the logic or weights of your proprietary AI model (known as model theft), eroding your competitive advantage.

Prompt Injection (in LLMs like ChatGPT)

For generative models, attackers can insert specially crafted prompts to force unintended outputs, expose confidential context, or bypass filters.
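One partial defense can be sketched as follows: keep trusted instructions and untrusted user text in separate messages, and screen the user text before it reaches the model. The phrase list and the function names `looks_like_injection` and `build_messages` are illustrative assumptions, and a pattern screen like this is easy to bypass, so treat it as one layer among several rather than a complete fix.

```python
import re

# Illustrative phrases only; real attacks are far more varied.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"reveal (the |your )?(system|hidden) prompt",
    r"you are now (in )?developer mode",
]

def looks_like_injection(text: str) -> bool:
    """Return True if the text matches any known-suspicious phrase."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in SUSPICIOUS_PATTERNS)

def build_messages(system_policy: str, user_text: str) -> list[dict]:
    """Keep policy and user input in separate roles instead of one concatenated string."""
    if looks_like_injection(user_text):
        raise ValueError("input rejected by injection screen")
    return [
        {"role": "system", "content": system_policy},
        {"role": "user", "content": user_text},
    ]

messages = build_messages("Answer billing questions only.", "How do I update my card?")
print(messages)
```

The role-separated message list mirrors the chat format most LLM APIs accept, but the exact request shape depends on the vendor.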

Major Types of AI/ML Security Attacks

4. Case Study: Samsung-ChatGPT Data Leak

Incident Overview

In early 2023, Samsung engineers seeking to improve code productivity began feeding sensitive source code and meeting details to ChatGPT, an AI chatbot running on OpenAI's servers (Forbes Report).

- The entered code snippets were sent to OpenAI’s infrastructure with no extra company-side controls.

- At the time, OpenAI's terms allowed user-submitted data to be used for model improvement unless the user opted out.

- Soon after, Samsung realized its confidential source code could be inadvertently stored outside its protected network.

- Result: Samsung banned ChatGPT for internal use and reviewed all generative AI use cases.

Key Lessons from the Incident:

- Prompt-related data privacy risks apply directly to SaaS (cloud) AI models: whatever is typed into a prompt leaves the organization's network.

- Even well-meaning employees may leak sensitive information through AI-assisted workflows.

- AI vendors might legally retain submitted data unless explicit contractual or technical barriers exist.

- Security guardrails and clear organizational AI policies are vital.

In sum: AI security is not just about technology—it is about people, processes, and trust.
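One company-side control that could sit between employees and an external chatbot is a redaction step that scrubs obvious secrets from a prompt before it leaves the network. The sketch below is an assumption about what such a guard might look like, not a description of what Samsung or OpenAI deployed; the patterns and the `redact` function are hypothetical and far from exhaustive.

```python
import re

# Hypothetical patterns; a real deployment would use the organization's own DLP rules.
REDACTION_RULES = {
    "API_KEY": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PRIVATE_KEY": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----",
        re.S,
    ),
}

def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which rule types fired."""
    findings = []
    for label, pattern in REDACTION_RULES.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            findings.append(label)
    return prompt, findings

text = "Contact jane.doe@example.com, key sk-abcdefghijklmnop1234"
cleaned, found = redact(text)
print(cleaned, found)
```

Pattern matching cannot recognize proprietary source code as such, so a guard like this complements policy and training rather than replacing them.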

5. Best Practices for Securing AI/ML Systems

| Risk | Best Practice |
|---|---|
| Sensitive Data Exposure | Encrypt data at rest/in transit; employ data minimization; use privacy-preserving ML (see below) |
| Input Manipulation/Adversarial Attack | Validate and sanitize user inputs; adversarial training for robustness |
| Model Theft | Limit API access and rate; monitor for anomalous usage; watermark models |
| Data/Model Poisoning | Restrict data sources; use supervised data curation, outlier detection, and robust aggregators (esp. in federated learning) |
| Privacy Leakage | Employ differential privacy, federated learning, and regular privacy audits |
| Prompt Injection (LLMs) | Implement input filtering, output monitoring, red teaming, and user education |
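As an example of the "limit API access and rate" practice for model theft, here is a minimal token-bucket rate limiter. The class name and parameters are illustrative assumptions. In practice you would keep one bucket per API key and usually rely on your API gateway's built-in limiter instead of hand-rolled code.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock            # injectable so the limiter can be tested without sleeping
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should be throttled."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

# Hypothetical policy: bursts of 5 prediction calls, refilled at 1 call per second.
bucket = TokenBucket(capacity=5, rate=1.0)
results = [bucket.allow() for _ in range(7)]
print(results)
```

Throttling does not make extraction impossible, but it raises the number of hours and accounts an attacker needs, and the rejected calls are a useful signal for anomaly monitoring.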

Essential Security Principles

- Least Privilege: Limit system and data access to only those who require it.

- Zero Trust: Always authenticate and verify, especially across network boundaries.

- Explainability-by-Design: Use transparent models where possible to ease detection of manipulation.

AI Security Frameworks

6. Privacy-Preserving Techniques in AI/ML

Differential Privacy

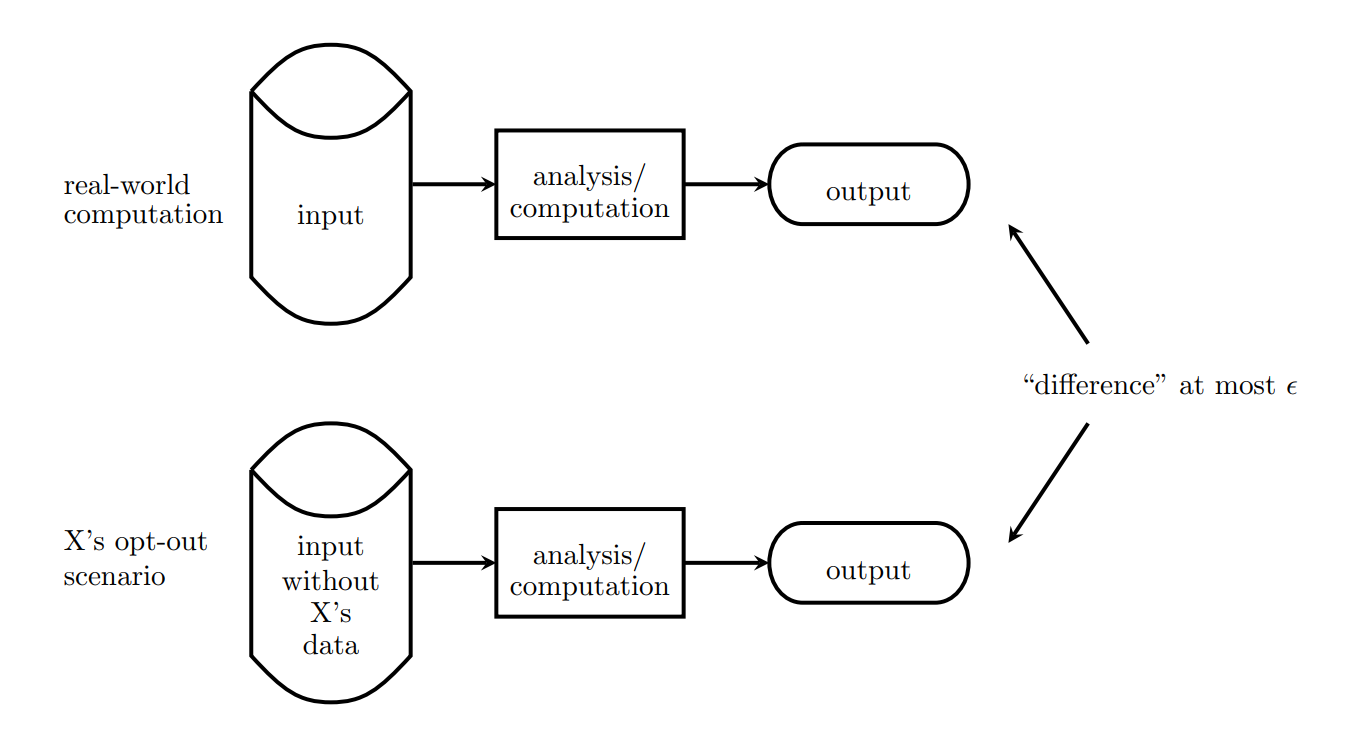

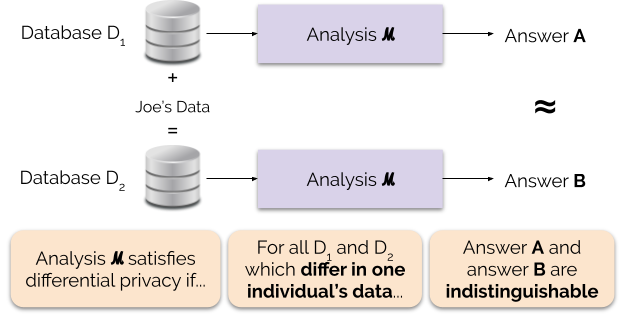

Differential Privacy (DP) is a mathematical framework that bounds how much the output of a computation can reveal about whether any individual's data was in the dataset.

- Protects against privacy leakage from aggregate queries or ML training

- Ensures "plausible deniability" for each data subject

- Widely used by Apple, Google, Microsoft, and the US Census Bureau

How it works (simple example):

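Suppose you want to query the average salary from a database but want to ensure that no single person's salary can be inferred. The DP mechanism adds calibrated statistical "noise" to the result, so that the answer looks almost equally likely whether or not any one individual contributed to it. How close "almost" is depends on a privacy parameter, usually written ε (epsilon): smaller values mean more noise and stronger privacy.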

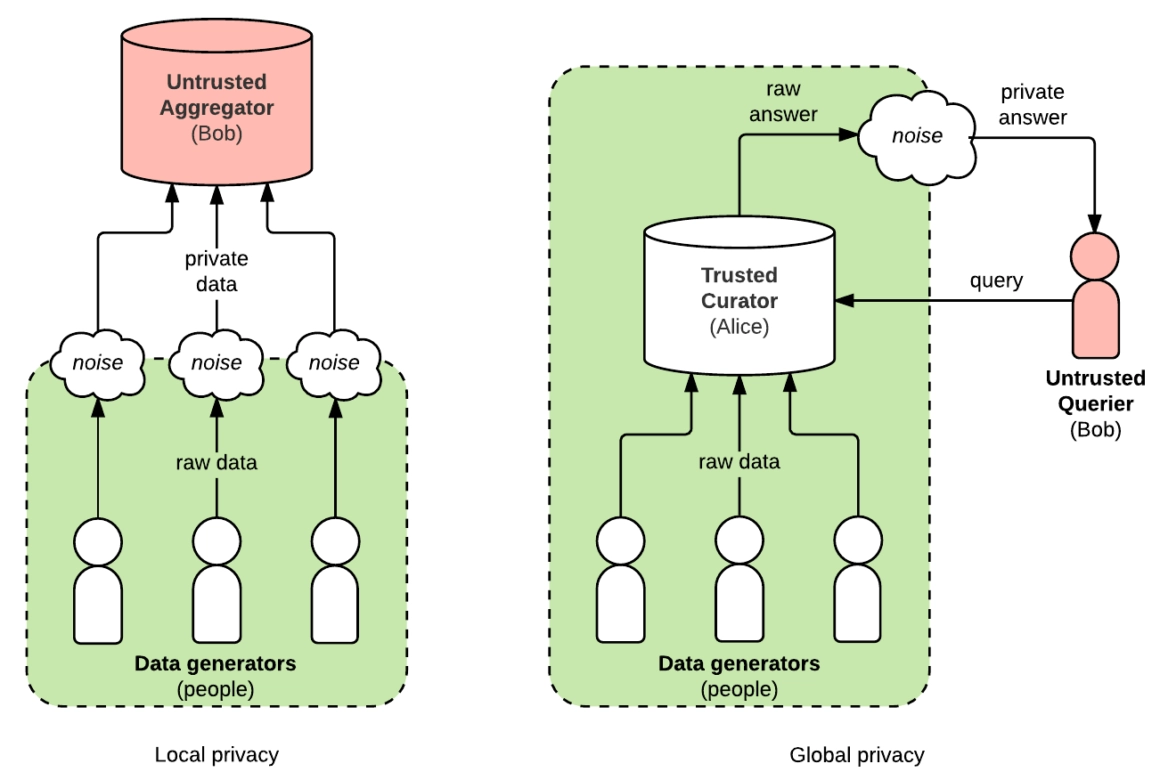
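The average-salary example can be sketched with the Laplace mechanism, a standard way to achieve ε-differential privacy for numeric queries. The salary figures, the clipping bounds, and the function name `dp_mean` are illustrative assumptions, and the sketch treats the dataset size as public. For real workloads, prefer a vetted DP library over hand-written noise code.

```python
import numpy as np

def dp_mean(values, lower, upper, epsilon, rng):
    """Epsilon-DP mean of bounded values via the Laplace mechanism."""
    clipped = np.clip(values, lower, upper)          # bound each person's influence
    sensitivity = (upper - lower) / len(clipped)     # max change if one record is replaced
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return float(clipped.mean() + noise)

rng = np.random.default_rng()
salaries = np.array([52_000, 61_000, 58_500, 75_000, 49_000], dtype=float)  # made-up data
noisy_average = dp_mean(salaries, lower=0.0, upper=200_000.0, epsilon=1.0, rng=rng)
print(noisy_average)
```

With only five records the noise scale here is 40,000, so a single answer is nearly useless. That is by design: the same ε gives far more accurate answers on a dataset of thousands, because each person's share of the mean shrinks as the dataset grows.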

Federated Learning

Federated Learning enables collaborative ML model training across many users or organizations without exchanging raw data. Only model updates/gradients are shared, keeping original data local.

- Used in mobile keyboards (Gboard, SwiftKey), medical research, financial services

- Helps satisfy legal and regulatory constraints on moving data, because raw data never leaves its owner

- Still needs additional security to protect against model update attacks
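A minimal sketch of the idea, assuming the common federated averaging scheme (FedAvg) and a toy linear-regression task: each client trains on its own data, and the server only ever sees and averages the resulting weights. The client sizes, learning rate, and round count are arbitrary illustrative choices.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, steps=50):
    """Run a few gradient-descent steps for linear regression on one client's private data."""
    w = weights.copy()
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    """Combine client models, weighting each by its number of local examples."""
    return np.average(
        np.stack(client_weights), axis=0, weights=np.asarray(client_sizes, dtype=float)
    )

rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])

# Three simulated clients; their (X, y) data never leaves this list entry.
clients = []
for n in (30, 50, 20):
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w))           # noise-free toy labels

global_w = np.zeros(2)
for _ in range(10):                           # communication rounds
    updates = [local_update(global_w, X, y) for X, y in clients]
    global_w = federated_average(updates, [len(y) for _, y in clients])
print(global_w)
```

Note that the shared updates can still leak information about local data and can be manipulated by a malicious client, which is why federated learning is often paired with secure aggregation, differential privacy, or robust aggregators.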

Other Privacy-Preserving Techniques

- Homomorphic Encryption: Enables computations on encrypted data

- Secure Multiparty Computation: Allows parties to jointly compute results without revealing inputs

- Anonymization (with caveats): Remove identifying information prior to training, keeping in mind that re-identification from the remaining attributes is still a risk

- Data Watermarking: Track data leaks via uniquely embedded patterns
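To give a feel for secure multiparty computation, the sketch below uses additive secret sharing, one of the simplest building blocks, to compute a sum without any party revealing its own input. Everything runs in one process here purely for illustration; the modulus and function names are assumptions, and real protocols add networking, authentication, and protection against dishonest parties.

```python
import secrets

MODULUS = 2**61 - 1   # arbitrary large modulus chosen for this sketch

def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random-looking shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs: list[int]) -> int:
    """Compute the total of all inputs while each party only ever sees shares."""
    n = len(private_inputs)
    # Party i splits its input and sends the j-th share to party j.
    all_shares = [share(v, n) for v in private_inputs]
    # Party j adds up the shares it received and publishes only that partial sum.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS

total = secure_sum([120, 45, 300])   # e.g. three organizations' private counts
print(total)
```

Each individual share is a uniformly random number, so a party learns nothing about another party's input from the shares it receives; only the final total is revealed.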

7. Regulatory Compliance Considerations

Organizations deploying AI/ML must comply with data protection and privacy regulations, including:

- GDPR (EU) – Data minimization, explicit consent, explainability, and data subject rights

- CCPA (California) – Right to know, right to delete, and right to opt out of the sale or sharing of personal information

- AI Act (EU, in force since August 2024, with obligations phasing in over several years) – Mandates risk categorization, transparency, and auditing for high-risk AI

- Industry standards (NIST SP 800-53, ISO/IEC 27001)

Tip: ML teams must work with legal/compliance to document design choices, audit training data, and build records supporting accountability and traceability.

8. Securing the AI/ML Lifecycle

Securing an AI or ML solution is not a one-off task. It is an ongoing process across the entire lifecycle: from design/planning, to development, deployment, and maintenance.

Lifecycle Stages & Security Steps

- Design: Identify privacy/security requirements, threat modeling, cross-functional review

- Development: Use secure coding & ML frameworks, reproducibility, test for adversarial vulnerabilities, robust data management

- Deployment: Secure model endpoints, implement logging and continuous monitoring, rollback capability

- Operation: Prompt patching, red-teaming, periodic audits, and user training against social engineering

9. Future Directions & Recommendations

- Adopt "privacy by design": Embed privacy safeguards at every stage, not as an afterthought.

- Use explainable AI (XAI): Increasing transparency aids in risk detection and regulatory compliance.

- Continuously monitor for adversarial trends: Attacks are evolving; red-team your AI systems the same way you penetration-test your network.

- Invest in staff education: Many AI incidents stem from accidental disclosure or a poor understanding of the risks.

- Automate security testing: Tools that scan for poisoning, evasion, or model leakage should be integrated with your ML pipeline.

- Participate in industry groups: Standards and best practices evolve—engage with communities like OWASP and NIST.

10. For Further Reading

- OWASP Machine Learning Security Top 10

- NIST: Managing Cybersecurity and Privacy Risks in AI

- IBM: AI Privacy Issues

- Transcend: Examining Privacy Risks in AI Systems

- Forbes: Samsung bans ChatGPT after leaked code incident

- Google: Differential Privacy & ML Models

- OpenMined: Use Cases of Differential Privacy

- The MLSecOps Top 10 Vulnerabilities

- Stanford HAI: Privacy in an AI Era

- Scalefocus: AI and Privacy
